Pentesting AWS Environments

Attacking AWS Accounts from a black box perspective

I spend a lot of time reviewing the configuration of AWS accounts from a white box perspective (hence snotra and snotra.cloud), which affords you complete visibility of all resources in the account. However, this post will focus on attacking AWS from a black box perspective, where you might only have, for example, a static website hosted in S3, a bucket name or an account ID. I will endeavour to keep this page updated as new black box techniques are discovered.

S3 Static Website Hosting

Websites served directly from S3 static website hosting are aliased in DNS to an AWS subdomain, so S3 hosting can be identified from DNS.

Resolve the IP addresses of the website's hostname:

    $ host flaws.cloud
    flaws.cloud has address 52.92.242.131
    flaws.cloud has address 52.92.187.51
    flaws.cloud has address 52.218.216.226
    flaws.cloud has address 52.92.165.179
    flaws.cloud has address 52.92.178.3
    flaws.cloud has address 52.92.137.179
    flaws.cloud has address 52.92.233.203
    flaws.cloud has address 52.218.220.218

Reverse lookup one of the IP addresses; if the site is hosted in S3, it will resolve to s3-website-<region>.amazonaws.com:

    $ host 52.92.242.131
    131.242.92.52.in-addr.arpa domain name pointer s3-website-us-west-2.amazonaws.com.

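Browsing to <bucket_name>.s3-website-<region>.amazonaws.com will also take you to the site, e.g. http://flaws.cloud.s3-website-us-west-2.amazonaws.com/ (some regions use a dot instead: <bucket_name>.s3-website.<region>.amazonaws.com). The bucket name normally matches the hostname, since S3 requires this when a domain points directly at a website endpoint.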
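The DNS checks above are easy to script. The following Python sketch resolves a hostname, reverse-looks-up each address and extracts the region from any S3 website PTR name. It assumes the PTR name follows either the dash form shown above or the dot form (s3-website.<region>) that some regions use for website endpoints; the function names are hypothetical helpers, not part of any tool mentioned in this post.

```python
import re
import socket

# PTR names for S3 website endpoints, e.g. "s3-website-us-west-2.amazonaws.com."
# The dot form ("s3-website.eu-central-1.amazonaws.com") is matched too, as an assumption.
S3_WEBSITE_PTR = re.compile(r"^s3-website[-.]([a-z0-9-]+)\.amazonaws\.com\.?$")


def region_from_ptr(ptr_name):
    """Return the region encoded in an S3 website PTR name, or None."""
    match = S3_WEBSITE_PTR.match(ptr_name.lower())
    return match.group(1) if match else None


def website_endpoint(bucket, ptr_name):
    """Bucket-specific website hostname built from the PTR name, or None."""
    if region_from_ptr(ptr_name) is None:
        return None
    return f"{bucket}.{ptr_name.rstrip('.').lower()}"


def s3_website_regions(hostname):
    """Resolve hostname, reverse-lookup every address and collect S3 website regions."""
    _, _, addresses = socket.gethostbyname_ex(hostname)
    regions = set()
    for ip in addresses:
        try:
            ptr_name, _, _ = socket.gethostbyaddr(ip)
        except OSError:
            continue  # no PTR record, or the lookup failed
        region = region_from_ptr(ptr_name)
        if region:
            regions.add(region)
    return regions
```

With network access, s3_website_regions("flaws.cloud") would be expected to report us-west-2 if the PTR records match the output shown above, and website_endpoint("flaws.cloud", ptr_name) then gives the bucket's website hostname.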

Bucket Listing

If the bucket hosting the website grants list access to everyone, its contents can be listed in the browser by visiting http://<bucketname>.s3.amazonaws.com/ or with curl, e.g.

    $ curl -s http://flaws.cloud.s3.amazonaws.com/ | xq
    <?xml version="1.0" encoding="UTF-8"?>
    <ListBucketResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">
      <Name>flaws.cloud</Name>
      <Prefix/>
      <Marker/>
      <MaxKeys>1000</MaxKeys>
      <IsTruncated>false</IsTruncated>
      <Contents>
        <Key>hint1.html</Key>
        <LastModified>2017-03-14T03:00:38.000Z</LastModified>
        <ETag>"f32e6fbab70a118cf4e2dc03fd71c59d"</ETag>
        <Size>2575</Size>
        <StorageClass>STANDARD</StorageClass>
      </Contents>
      <Contents>
        <Key>hint2.html</Key>
        <LastModified>2017-03-03T04:05:17.000Z</LastModified>
        <ETag>"565f14ec1dce259789eb919ead471ab9"</ETag>
        <Size>1707</Size>
        <StorageClass>STANDARD</StorageClass>
      </Contents>
      <Contents>
        <Key>hint3.html</Key>
        <LastModified>2017-03-03T04:05:11.000Z</LastModified>
        <ETag>"ffe5dc34663f83aedaffa512bec04989"</ETag>
        <Size>1101</Size>
        <StorageClass>STANDARD</StorageClass>
      </Contents>
      <Contents>
        <Key>index.html</Key>
        <LastModified>2020-05-22T18:16:45.000Z</LastModified>
        <ETag>"f01189cce6aed3d3e7f839da3af7000e"</ETag>
        <Size>3162</Size>
        <StorageClass>STANDARD</StorageClass>
      </Contents>
      <Contents>
        <Key>logo.png</Key>
        <LastModified>2018-07-10T16:47:16.000Z</LastModified>
        <ETag>"0623bdd28190d0583ef58379f94c2217"</ETag>
        <Size>15979</Size>
        <StorageClass>STANDARD</StorageClass>
      </Contents>
      <Contents>
        <Key>robots.txt</Key>
        <LastModified>2017-02-27T01:59:28.000Z</LastModified>
        <ETag>"9e6836f2de6d6e6691c78a1902bf9156"</ETag>
        <Size>46</Size>
        <StorageClass>STANDARD</StorageClass>
      </Contents>
      <Contents>
        <Key>secret-dd02c7c.html</Key>
        <LastModified>2017-02-27T01:59:30.000Z</LastModified>
        <ETag>"c5e83d744b4736664ac8375d4464ed4c"</ETag>
        <Size>1051</Size>
        <StorageClass>STANDARD</StorageClass>
      </Contents>
    </ListBucketResult>

or via the CLI, e.g.

    $ aws s3 ls flaws.cloud --no-sign-request
    2017-03-14 03:00:38 2575 hint1.html
    2017-03-03 04:05:17 1707 hint2.html
    2017-03-03 04:05:11 1101 hint3.html
    2020-05-22 18:16:45 3162 index.html
    2018-07-10 16:47:16 15979 logo.png
    2017-02-27 01:59:28 46 robots.txt
    2017-02-27 01:59:30 1051 secret-dd02c7c.html

    $ aws s3 ls mega-big-tech --no-sign-request
    PRE images/
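For large buckets it can be easier to process the anonymous XML listing programmatically. This standard-library Python sketch fetches http://<bucketname>.s3.amazonaws.com/ without credentials and returns each key with its size. It only reads the first page: a ListBucketResult holds at most 1000 keys and sets IsTruncated when more remain. The function names are hypothetical.

```python
import urllib.request
import xml.etree.ElementTree as ET

# Namespace used by the ListBucketResult document shown above.
S3_NS = {"s3": "http://s3.amazonaws.com/doc/2006-03-01/"}


def parse_listing(xml_text):
    """Return ([(key, size_in_bytes), ...], is_truncated) from a ListBucketResult."""
    root = ET.fromstring(xml_text)
    objects = [
        (item.findtext("s3:Key", namespaces=S3_NS),
         int(item.findtext("s3:Size", default="0", namespaces=S3_NS)))
        for item in root.findall("s3:Contents", S3_NS)
    ]
    truncated = root.findtext("s3:IsTruncated", default="false", namespaces=S3_NS) == "true"
    return objects, truncated


def list_public_bucket(bucket, timeout=10):
    """Fetch the first page of an anonymously listable bucket (no credentials sent)."""
    with urllib.request.urlopen(f"http://{bucket}.s3.amazonaws.com/", timeout=timeout) as resp:
        return parse_listing(resp.read())
```

If the truncated flag comes back True, repeating the request with a marker query parameter set to the last key returned fetches the next page.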

Recovering Account ID

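Once you have a bucket name, it is possible to obtain the ID of the AWS account that owns it, provided you can read an object or list the bucket (for example because it is public). The technique abuses the s3:ResourceAccount condition key: a session policy that only allows S3 access when the bucket's account ID matches a wildcard such as "1*" reveals the ID one digit at a time.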

First, you need a role in an AWS account you control, which you can assume, with the following permissions:

- s3:GetObject

- s3:ListBucket

Once you have set up the role and are able to assume it, the s3-account-search tool can be used to recover the account ID:

    $ s3-account-search arn:aws:iam::747806891254:role/s3-account-search mega-big-tech
    Starting search (this can take a while)
    found: 1
    found: 10
    found: 107
    found: 1075
    found: 10751
    found: 107513
    found: 1075135
    found: 10751350
    found: 107513503
    found: 1075135037
    found: 10751350379
    found: 107513503799
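The growing "found:" prefixes above reflect how the search proceeds. The Python sketch below illustrates the idea rather than reproducing s3-account-search's code: it builds a session policy that only allows S3 access when s3:ResourceAccount matches a prefix wildcard, and extends the prefix whenever access succeeds. The access_allowed callback is a hypothetical stand-in for assuming your role with that policy (sts:AssumeRole accepts a session policy through its Policy parameter) and then trying GetObject or ListBucket against the target bucket.

```python
import json

ACCOUNT_ID_LENGTH = 12  # AWS account IDs are 12 digits and may start with 0


def session_policy(prefix):
    """Session policy allowing S3 reads only if the bucket's account ID starts with prefix."""
    return json.dumps({
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:ListBucket"],
            "Resource": "*",
            "Condition": {"StringLike": {"s3:ResourceAccount": [f"{prefix}*"]}},
        }],
    })


def find_account_id(access_allowed):
    """Recover the owner account ID one digit at a time.

    access_allowed(policy_json) is a hypothetical callback that returns True when
    a request to the target bucket succeeds under that session policy.
    """
    found = ""
    for _ in range(ACCOUNT_ID_LENGTH):
        for digit in "0123456789":
            if access_allowed(session_policy(found + digit)):
                found += digit
                break
        else:
            raise RuntimeError(f"no digit matched after prefix {found!r}")
    return found
```

At most ten guesses per position are needed, so a full 12-digit ID costs no more than 120 assume-and-test round trips.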

Region

As well as appearing in the website URL, the bucket's region is returned in the "x-amz-bucket-region" HTTP response header.

    $ curl http://flaws.cloud.s3.amazonaws.com/ -i
    HTTP/1.1 200 OK
    x-amz-id-2: IwojjzeCXRCG6a3rfYf4iTdDV3bQwmeDjCIYuHTEld3F8fX9VCqumbbxnvHWr0UEGImIq3+fnkA=
    x-amz-request-id: Y8S0FKWPD4HCS755
    Date: Wed, 31 Jan 2024 14:47:44 GMT
    x-amz-bucket-region: us-west-2
    Content-Type: application/xml
    Transfer-Encoding: chunked
    Server: AmazonS3
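The header can also be read without curl. This standard-library Python sketch sends a HEAD request for the bucket, and also offers a parser for saved `curl -i` output. It assumes S3 includes x-amz-bucket-region on error responses as well (for example a 403 for a private bucket), which is why the HTTPError branch reads the header too; the function names are hypothetical.

```python
import urllib.error
import urllib.request

REGION_HEADER = "x-amz-bucket-region"


def region_from_raw_headers(raw):
    """Extract the bucket region from raw header text, e.g. saved `curl -i` output."""
    for line in raw.splitlines():
        name, sep, value = line.partition(":")
        if sep and name.strip().lower() == REGION_HEADER:
            return value.strip()
    return None


def bucket_region(bucket, timeout=10):
    """Send a HEAD request to the bucket and return its region header, if present."""
    request = urllib.request.Request(f"http://{bucket}.s3.amazonaws.com/", method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            headers = response.headers
    except urllib.error.HTTPError as err:
        # Assumption: S3 also sends the region header on error responses.
        headers = err.headers
    return headers.get(REGION_HEADER)  # header lookup is case-insensitive
```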

Account Aliases

It is also possible to identify potential accounts by brute-forcing account aliases (or checking account IDs) against the console sign-in URL:

    $ curl -v https://<account_id>.signin.aws.amazon.com
    $ curl -v https://<alias>.signin.aws.amazon.com

If you get an HTTP 200 response then the account exists.

The validate_accounts.py tool can be used to automate the process:

    $ ./validate_accounts.py -i /tmp/accounts.txt -o /tmp/out.json
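If you would rather not depend on an external script, the sign-in check can be approximated in Python. This sketch mirrors plain `curl -v` by not following redirects and, as described above, treats only an HTTP 200 as evidence that the account exists. It has not been validated against every response AWS may return, so spot-check results manually; the function names are hypothetical and unrelated to validate_accounts.py.

```python
import urllib.error
import urllib.request


class NoRedirect(urllib.request.HTTPRedirectHandler):
    """Refuse redirects so the first status code is seen, like curl without -L."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None


def signin_url(candidate):
    """Account-specific console sign-in URL for an alias or 12-digit account ID."""
    return f"https://{candidate}.signin.aws.amazon.com"


def read_candidates(text):
    """One alias or account ID per line; blank lines are ignored."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def account_exists(candidate, timeout=10):
    """True only if the sign-in URL answers HTTP 200."""
    opener = urllib.request.build_opener(NoRedirect)
    try:
        with opener.open(signin_url(candidate), timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # URLError/HTTPError (redirects, error statuses, DNS failures) and
        # socket timeouts are all OSError subclasses.
        return False
```

For example, [c for c in read_candidates(open("/tmp/accounts.txt").read()) if account_exists(c)] checks every candidate in the same input file used above.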

Account ID (API Gateway, Lambda, DataExchange)

You can also recover account IDs from API Gateways, Lambda functions and AWS Data Exchange datasets (and likely many more resource types).

Public AMIs

Once an account ID has been obtained, it can be used to check for any public Amazon Machine Images (AMIs) available for pillaging.

This can easily be done via the AWS web console: EC2 > AMIs > select "Public images" in the drop-down menu > search by "Owner" and enter the account ID.

or via the CLI:

    $ aws ec2 describe-images --owners 807659967283 --region us-east-2
    {
        "Images": [
            {
                "Architecture": "x86_64",
                "CreationDate": "2022-10-07T15:12:48.000Z",
                "ImageId": "ami-0426fc5dbcbd7067b",
                "ImageLocation": "807659967283/matillion-etl-for-redshift-ami-1.66.20-byol-07-10-2022-14h-42m",
                "ImageType": "machine",
                "Public": true,
                "OwnerId": "807659967283",
                ...

Launch an instance from any public AMIs you find and search the disk for sensitive information, including access keys, SSH keys and passwords.

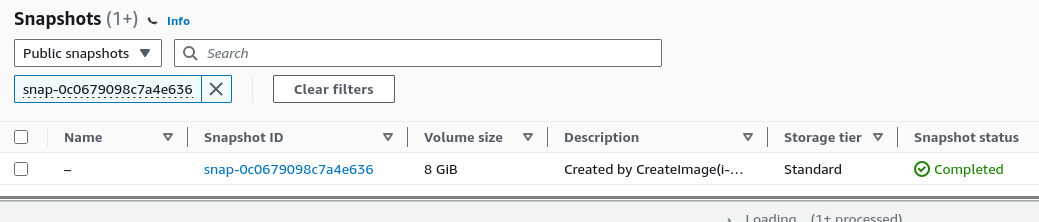
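A first pass over the disk of an instance launched from a public AMI (or a mounted volume) can be automated. This Python sketch walks a directory tree and flags strings shaped like AWS access key IDs (AKIA and ASIA prefixes) and PEM private key headers. The patterns are illustrative, not exhaustive (passwords will not be found, for instance), and symlinks are skipped deliberately because absolute links inside a foreign filesystem would resolve to files on your own machine.

```python
import os
import re

# Illustrative patterns only: AWS access key IDs (long-term AKIA, temporary ASIA)
# and PEM private key headers such as "-----BEGIN OPENSSH PRIVATE KEY-----".
PATTERNS = {
    "aws_access_key_id": re.compile(rb"\b(?:AKIA|ASIA)[A-Z0-9]{16}\b"),
    "private_key": re.compile(rb"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----"),
}
MAX_FILE_BYTES = 5 * 1024 * 1024  # skip large files (binaries, databases) for speed


def scan_tree(root):
    """Walk root and yield (path, pattern_name, matched_text) for each hit."""
    for dirpath, _dirnames, filenames in os.walk(root):  # does not follow dir symlinks
        for name in filenames:
            path = os.path.join(dirpath, name)
            # Skip symlinks: absolute targets would point into the analyst's own system.
            if os.path.islink(path) or not os.path.isfile(path):
                continue
            try:
                if os.path.getsize(path) > MAX_FILE_BYTES:
                    continue
                with open(path, "rb") as handle:
                    data = handle.read()
            except OSError:
                continue  # permission denied, vanished file, etc.
            for label, pattern in PATTERNS.items():
                for match in pattern.finditer(data):
                    yield path, label, match.group().decode("ascii", "replace")
```

Point it at a mounted volume or at specific directories such as /home, /root, /etc or a web root, rather than at / on a running system, where pseudo-filesystems like /proc can block or flood the scan.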

Public EBS Snapshots

Just like AMIs, EBS snapshots can also be public and should be checked for sensitive information.

In the web console, navigate to EC2 > Snapshots > select "Public snapshots" in the drop-down menu > search by "Owner" and enter the account ID.

or via the CLI:

    $ aws ec2 describe-snapshots --owner-ids 104506445608 --profile default --region us-east-1
    {
        "Snapshots": [
            {
                "Description": "Created by CreateImage(i-06d9095368adfe177) for ami-07c95fb3e41cb227c",
                "Encrypted": false,
                "OwnerId": "104506445608",
                "Progress": "100%",
                "SnapshotId": "snap-0c0679098c7a4e636",
                "StartTime": "2023-06-12T15:20:20.580000+00:00",
                "State": "completed",
                "VolumeId": "vol-0ac1d3295a12e424b",
                "VolumeSize": 8,
                "Tags": [
                    {
                        "Key": "Name",
                        "Value": "PublicSnapper"
                    }
                ],
                "StorageTier": "standard"
            },
            {
                "Description": "Created by CreateImage(i-0199bf97fb9d996f1) for ami-0e411723434b23d13",
                "Encrypted": false,
                "OwnerId": "104506445608",
                "Progress": "100%",
                "SnapshotId": "snap-035930ba8382ddb15",
                "StartTime": "2023-08-24T19:30:49.742000+00:00",
                "State": "completed",
                "VolumeId": "vol-09149587639d7b804",
                "VolumeSize": 24,
                "StorageTier": "standard"
            }
        ]
    }

or, to return only publicly restorable snapshots ("self" queries your own account; replace it with the target account ID):

    $ aws ec2 describe-snapshots --owner-ids self --restorable-by-user-ids all --no-paginate
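When an account exposes many snapshots, it helps to triage the describe-snapshots output before restoring anything. This Python sketch reads that JSON (for example saved with "> snapshots.json"), lists snapshots newest first with their sizes, and builds an aws ec2 create-volume command for a chosen snapshot. The availability zone is a placeholder you must pick yourself, in the same region as the snapshot; the helper names are hypothetical.

```python
import json
from datetime import datetime


def summarise_snapshots(describe_output):
    """Return (SnapshotId, VolumeSize GiB, Description, StartTime) rows, newest first."""
    snapshots = json.loads(describe_output).get("Snapshots", [])
    rows = [
        (snap["SnapshotId"], snap.get("VolumeSize"), snap.get("Description", ""), snap["StartTime"])
        for snap in snapshots
    ]
    # StartTime looks like "2023-06-12T15:20:20.580000+00:00", which fromisoformat accepts.
    rows.sort(key=lambda row: datetime.fromisoformat(row[3]), reverse=True)
    return rows


def create_volume_command(snapshot_id, availability_zone):
    """argv for restoring a snapshot to a new volume in your own account."""
    return [
        "aws", "ec2", "create-volume",
        "--snapshot-id", snapshot_id,
        "--availability-zone", availability_zone,
    ]
```

The returned list can be passed to subprocess.run; the new volume can then only be attached to an instance in that same availability zone.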

Create a volume from any snapshots you find, launch an EC2 instance in the same availability zone, attach the new volume, mount it and search for sensitive content.

The brilliant Pacu has a module "ebs__enum_snapshots_unauth" for this.

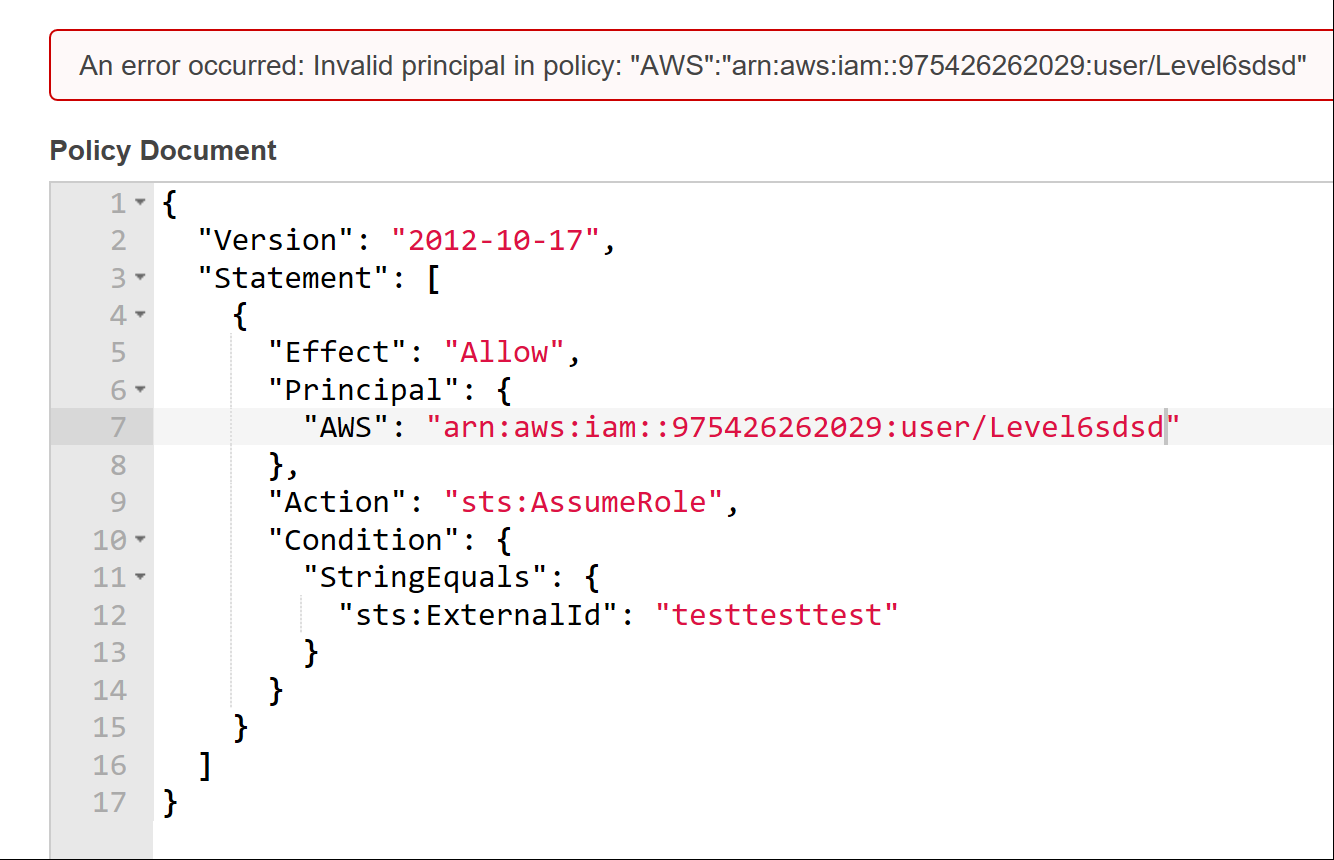

User Enumeration

Once an account ID has been obtained, it is possible to enumerate IAM users.

- Create a role in your account with no permissions

- Set the trusted entity to the target AWS account

- Edit the role's trust policy and enter the ARN of the user you want to validate (arn:aws:iam::<account_id>:user/<user_name>) as the trusted principal

- If the user does not exist, an "Invalid principal in policy" error is returned

- If the policy saves, the user exists in the account.
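The trust policy edit in these steps can be generated programmatically. This Python sketch builds the trust policy for a candidate principal ARN and maps the outcome of saving it to a verdict. It relies on IAM rejecting a policy that names a non-existent principal with a MalformedPolicyDocument error ("Invalid principal in policy"); any other error is deliberately reported as inconclusive. The function names are hypothetical.

```python
import json


def principal_arn(account_id, name, kind="user"):
    """ARN of the IAM user (or, with kind="role", role) being tested."""
    return f"arn:aws:iam::{account_id}:{kind}/{name}"


def trust_policy(candidate_arn):
    """Trust policy that names the candidate as the trusted principal."""
    return json.dumps({
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"AWS": candidate_arn},
            "Action": "sts:AssumeRole",
        }],
    })


def verdict(error_code):
    """Map the error code from saving the policy (None if it saved) to a result."""
    if error_code is None:
        return "exists"
    if error_code == "MalformedPolicyDocument":
        return "does not exist"  # IAM reports "Invalid principal in policy"
    return "inconclusive"  # e.g. throttling, or no permission on your own role
```

The document would be saved against your own zero-permission role with something like aws iam update-assume-role-policy --role-name <role_name> --policy-document '<json>', which needs the iam:UpdateAssumeRolePolicy permission.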

This process has been automated in Pacu's "iam__enum_users" module.

    Pacu (aws:imported-mfa) > exec iam__enum_users --role-name arn:aws:iam::707306871275:role/user-enum --account-id 099518971931 --word-list users
    Running module iam__enum_users...
    [iam__enum_users] Warning: This script does not check if the keys you supplied have the correct permissions. Make sure they are allowed to use iam:UpdateAssumeRolePolicy on the role that you pass into --role-name!
    [iam__enum_users] Targeting account ID: 099518971931
    [iam__enum_users] Starting user enumeration...
    [iam__enum_users] Found user: arn:aws:iam::099518971931:user/sec1user
    [iam__enum_users] Found 1 user(s):
    [iam__enum_users] arn:aws:iam::099518971931:user/secuser
    [iam__enum_users] iam__enum_users completed.
    [iam__enum_users] MODULE SUMMARY: 1 user(s) found after 4 guess(es).

Role Enumeration

Once an account ID has been obtained, it is possible to enumerate roles and, if you are lucky, assume them!

One method is to try to assume a role in another account and use the error message to infer whether the role exists.

    $ aws sts assume-role --role-arn arn:aws:iam::<account_id>:role/<role_name> --role-session-name <session_name>

Role exists:

    An error occurred (AccessDenied) when calling the AssumeRole operation: User: arn:aws:iam::012345678901:user/MyUser is not authorized to perform: sts:AssumeRole on resource: arn:aws:iam::111111111111:role/aws-service-role/rds.amazonaws.com/AWSServiceRoleForRDS

Role does not exist:

    An error occurred (AccessDenied) when calling the AssumeRole operation: Not authorized to perform sts:AssumeRole
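This comparison can be automated by matching on the two messages quoted for the assume-role call. The Python sketch below shells out to the AWS CLI (assumed to be installed and configured) and classifies the result; a zero exit status means the role was actually assumed, which is the wildcard-trust case. The regular expression is written against those example messages only, and AWS may word errors differently elsewhere, so treat "unknown" results as worth a manual look. The session name "enum-test" and the function names are hypothetical.

```python
import re
import subprocess

# Patterns taken from the two example AccessDenied messages; heuristics, not an API contract.
ROLE_EXISTS = re.compile(
    r"is not authorized to perform: sts:AssumeRole on resource: (arn:aws:iam::\d{12}:role/\S+)"
)
ROLE_MISSING = "Not authorized to perform sts:AssumeRole"


def classify_assume_role_error(message):
    """Return ("exists", role_arn), ("missing", None) or ("unknown", None)."""
    match = ROLE_EXISTS.search(message)
    if match:
        return "exists", match.group(1)
    if ROLE_MISSING in message:
        return "missing", None
    return "unknown", None


def try_assume(role_arn, session_name="enum-test"):
    """Call the AWS CLI; ("assumed", arn) means the trust policy let us in."""
    result = subprocess.run(
        ["aws", "sts", "assume-role",
         "--role-arn", role_arn,
         "--role-session-name", session_name],
        capture_output=True, text=True,
    )
    if result.returncode == 0:
        return "assumed", role_arn  # temporary credentials are in result.stdout
    return classify_assume_role_error(result.stderr)
```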

Any cross-account role whose trust policy grants access to all principals ("AWS": "*") can be assumed from any AWS account!

The Pacu "iam__enum_roles" module uses a different approach: like the user enumeration module, it validates a list of role names by editing a trust policy, then attempts to assume any roles it finds.

Misconfigured GitHub OIDC Role

Resources

- https://cloudar.be/awsblog/finding-the-account-id-of-any-public-s3-bucket/

- https://github.com/WeAreCloudar/s3-account-search/

- https://rhinosecuritylabs.com/aws/exploring-aws-ebs-snapshots/

- https://rhinosecuritylabs.com/aws/aws-iam-user-enumeration/

- https://rhinosecuritylabs.com/aws/assume-worst-aws-assume-role-enumeration/

- https://rhinosecuritylabs.com/aws/aws-role-enumeration-iam-p2/

- https://blog.plerion.com/conditional-love-for-aws-metadata-enumeration/

About the author

Shaun is a Penetration Tester and Bitcoiner based in state-controlled Britain, with over a decade in the security industry. He specialises in cloud and infrastructure security and regularly completes assessments for all manner of organisations, from global corporations to small charities and non-profits.